Why Chatcontrol is useless and dangerous

We really need to talk about chatcontrol, the EU's next mass surveillance system. This is a long post, so there are some hints on which parts you can skip if it's too long for you. Sources are in [brackets] & linked at the end. I tried to simplify a bit to keep the post accessible for people without deep technical knowledge. I've packed a LOT of content into this post - if you have trouble understanding, it's probably my fault. Ask me and I'll clarify!

What is Chatcontrol?

Chatcontrol is what critics call the regulation proposed by the European Commission [L1]. The regulation aims to reduce the online distribution of Child Sexual Abuse Material (CSAM). It will force messaging services to scan ALL content, including personal messages and photos, to detect CSAM and report detections to a newly established EU centre. The centre will then coordinate with police in EU countries and provide access to detection technologies.

While I doubt anyone will disagree with the aim, the means (mass surveillance) are not only violating privacy rights but also ineffective, for various reasons. This post will explain some of these reasons and what YOU can do to help stop the regulation. Let's start with the most important thing: Chatcontrol completely misses the point, as criminals rarely use messengers to share material - they're too slow to share large collections of CSAM.

Instead, they encrypt the files and upload them to a completely normal filesharing service. Since the files are encrypted, the service is unable to scan the contents, even if it wants to detect CSAM. Criminals can then simply share links to the content wherever they want [C1,4-6] - the scanning can't/won't hinder them! This alone should be enough to scrap the regulation! If that's enough for you, you can skip ahead to "What you can do".

But maybe we can catch criminals who DO share CSAM via messengers - there are some out there, and the proposal is directed at them. The regulation differentiates between 3 categories to detect [L2a]: known CSAM, unknown (new) CSAM and grooming.

While there has been lots of research, only detection of known CSAM is really feasible, and even that comes with issues. In the next sections, I'll explain some technical details why. Skip ahead to the summary if that's not your thing.

How detection would work

Detection of Known CSAM

Known CSAM images are the easiest to detect - still, it's not an easy task. A computer cannot simply look at two images like a human can and say if they're the same or not. It could compare all pixels, but that would be slow AND you'd need to have CSAM stored wherever the code doing the comparison runs. Such a comparison would also only work as long as the image is not modified at all. If the image is resized, brightened or blurred, this detection won't work.

To fix this, so-called perceptual hashing is used. The idea is simple: Split an image into different areas and calculate a value for each area. This list of values for an image is called a perceptual hash. To compare images, calculate the difference between the values of the two hashes. If the difference is below a value (the detection threshold), the images are likely the same. Now the problem is: How to choose the threshold where you say the images are the same?

Two approaches:

- Set threshold high. More modified CSAM will be detected (high recall). But set the threshold too high, and two unrelated images would be considered the same, in practice meaning a harmless image would be detected as CSAM. Or:

- Set threshold low, so there are fewer false positives (high precision). Too low, and you'll run into the same issue as when comparing pixels - images which have been slightly modified are not considered the same, so known CSAM goes undetected.
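To make the idea and the threshold trade-off more concrete, here's a minimal sketch of the scheme described above. It is NOT PhotoDNA or any real algorithm: the grid size, the use of mean brightness per area, the distance measure and all function names are my own assumptions for illustration.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels, 0-255 (assumed toy representation)


def block_hash(image: Image, grid: int = 4) -> List[float]:
    """Split the image into grid x grid areas and return the mean brightness of each."""
    height, width = len(image), len(image[0])
    values = []
    for gy in range(grid):
        for gx in range(grid):
            rows = range(gy * height // grid, (gy + 1) * height // grid)
            cols = range(gx * width // grid, (gx + 1) * width // grid)
            pixels = [image[y][x] for y in rows for x in cols]
            values.append(sum(pixels) / len(pixels))
    return values


def hash_distance(a: List[float], b: List[float]) -> float:
    """Average absolute difference between the values of two hashes."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def is_match(a: List[float], b: List[float], threshold: float) -> bool:
    """Two images count as 'the same' if their hash distance is below the threshold."""
    return hash_distance(a, b) < threshold


# Toy 8x8 images: a gradient, a slightly brightened copy, and an inverted (unrelated) one.
original = [[(x + y) * 16 for x in range(8)] for y in range(8)]
brightened = [[min(255, p + 10) for p in row] for row in original]
unrelated = [[255 - p for p in row] for row in original]

h_orig, h_bright, h_other = (block_hash(i) for i in (original, brightened, unrelated))

for threshold in (5, 15, 100):
    print(
        threshold,
        "modified copy detected:", is_match(h_orig, h_bright, threshold),
        "| unrelated image flagged:", is_match(h_orig, h_other, threshold),
    )
```

With these toy images, the lowest threshold misses the brightened copy, the highest one flags the unrelated image, and only the middle one gets both right. Real images are of course far messier than this.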

You can learn more about precision/recall here. For the detection of CSAM, there is no choice but to optimize for high precision: A low precision would mean many false positives. As there are far more normal messages than messages containing CSAM, the amount of false positives would quickly drown out the true positives. Luckily, perceptual hashing is just precise enough. How precise exactly?

A well-known perceptual hashing algorithm is Microsoft's PhotoDNA algorithm [T1a]. Its creator claims the chance of misidentification in a large analysis is about 1 in 50 billion [T1b]. While this is very impressive, such low error probabilities are an absolute must for reliably detecting CSAM. Take (as an example) WhatsApp: Assuming 100 billion messages per day [T2], even at these odds, there'll still be about 2 false positives PER DAY, ONLY for WhatsApp.
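The arithmetic behind that number, as a tiny sketch. It assumes that every message gets scanned and that the claimed error rate applies per message; the function name is made up for illustration.

```python
from fractions import Fraction


def expected_false_positives(messages_per_day: int, error_rate: Fraction) -> Fraction:
    """Expected number of harmless messages flagged per day (assumes every message is scanned)."""
    return messages_per_day * error_rate


whatsapp_messages_per_day = 100_000_000_000      # claimed figure from [T2]
photodna_error_rate = Fraction(1, 50_000_000_000)  # claimed figure from [T1b]

print(expected_false_positives(whatsapp_messages_per_day, photodna_error_rate))  # prints 2
```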

Client Side Scanning issues

So yes, image comparison is practical, AS LONG AS YOU INCLUDE HUMAN REVIEW. There are other issues, but to detect known CSAM, the accuracy is not really in question. An important question is: Where does the image get analyzed? On a central server? You'd have to throw out end-to-end encryption (E2EE). Analyzing on the users' devices is the only solution that technically keeps E2EE intact, but why would you trust criminals to use unmodified devices which scan for CSAM?

There are also concerns that PhotoDNA may be reversible, so distributing hashes for detection would be equivalent to distributing CSAM [T3]. If it is reversible, it'd also be possible to intentionally create images which are falsely detected as CSAM, then flood the detection system with them, making detection of real CSAM a lot more difficult. If the above issues are resolved, we MAY be able to reliably detect known CSAM. However, finding new CSAM is far less precise...

Detection of Unknown CSAM

When detecting unknown CSAM, the previous issues apply AND there are two connected additional issues: AI training and accuracy. To detect new material, an AI is trained by "looking at" lots of CSAM so it can learn what CSAM looks like - note that hashes are not enough, it needs to be actual CSAM. This means that if only the new EU centre is allowed access to CSAM, only the EU centre will be able to train an AI which detects new CSAM! Will they get it right?

As a rule of thumb: The better the AI works, the worse we can currently explain its behavior [T4]. This means we cannot be sure the AI learned "the right way" to detect CSAM. And there are many ways for it to get decisions wrong: As an example, an AI cannot simply rely on detecting images of naked children, as that would probably lead to false positives when parents send photos of their children at the beach or in the bathtub to the grandparents.

Even if the AI can detect actual porn with people that look underage, this can still lead to false positives - there have been HUMAN mistakes about this [C2]. So how could an AI correctly classify CSAM vs. no CSAM if not even humans are able to? It's a difficult problem, and there WILL be many false positives. Even if the error rate was just 1% (in practice it's higher), this would mean many 100s of millions of messages are falsely reported EVERY DAY.
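To show why even a "small" error rate is a problem, here's a rough sketch of what share of reports would actually be real. The message count is the WhatsApp figure from [T2] and the 1% error rate is the one from above. The prevalence (how many messages actually contain CSAM) is a purely HYPOTHETICAL number I picked for illustration, and I generously assume the AI catches every real case.

```python
from typing import NamedTuple


class ReportStats(NamedTuple):
    true_reports: float
    false_reports: float
    precision: float  # share of all reports that are real


def report_stats(messages: float, prevalence: float, recall: float,
                 false_positive_rate: float) -> ReportStats:
    """Estimate daily reports, assuming every message is scanned independently."""
    illegal = messages * prevalence
    harmless = messages - illegal
    true_reports = illegal * recall
    false_reports = harmless * false_positive_rate
    precision = true_reports / (true_reports + false_reports)
    return ReportStats(true_reports, false_reports, precision)


stats = report_stats(
    messages=100e9,            # messages per day, claimed figure from [T2]
    prevalence=1e-6,           # HYPOTHETICAL: 1 in a million messages contains CSAM
    recall=1.0,                # generous assumption: nothing is missed
    false_positive_rate=0.01,  # the 1% error rate from the text
)
print(f"false reports per day: {stats.false_reports:,.0f}")
print(f"share of reports that are real: {stats.precision:.4%}")
```

Play with the prevalence if you don't like my guess: as long as harmless messages vastly outnumber illegal ones, the false reports dominate.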

Grooming detection

For grooming detection: I haven't found many details, but it seems even less advanced. The European Commission says that Microsoft claims to have a technology with an accuracy of 88% [L2b], meaning a 12% error rate (it's unclear if the commission confused it with precision, but that would indicate low recall, which makes sense). In 2020, Microsoft presented their tool. Watch the presentation [T1c]: It's clear that it's not ready for automated large-scale detection.

The tool consisted (consists?) of simple text matching rules (for programmers: regexes). It

- is intended to help human moderators

- is not intended to run in real-time

- is not intended for law enforcement

- only supports English! [T1c]

Adapting the detection techniques to other languages will, of course, take time. It's looking for specific phrases, so it'll probably have a high precision, but low recall. Still, sexting adults are probably going to trigger false positives.

The tool is also not intended for end-to-end encrypted messages [T1c]. Once the rules for matching text are known (they HAVE to be on-device if you want to keep end-to-end encryption), criminals could just switch to different phrases (or even write bots to flood the report system)! Even if detection works well, it only works on text, so it'll be trivial to bypass: Ask the kid to join a Discord call, send voice messages, send images containing text etc. In theory, detection requirements apply to all content. But given how poorly speech-to-text works, just imagine a grooming detection where the software misinterprets about every 4th word and guess how that would affect the false positive rate.
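For the programmers, here's a minimal sketch of what rule-based text matching looks like and why it's easy to dodge. The rule below is a harmless placeholder I made up. I don't know the actual rules of Microsoft's tool, and the function name is hypothetical too.

```python
import re

# HYPOTHETICAL placeholder rule, not taken from any real detection tool.
RULES = [
    re.compile(r"\bforbidden phrase\b", re.IGNORECASE),
]


def is_flagged(message: str) -> bool:
    """Flag a message if any known rule matches its text."""
    return any(rule.search(message) for rule in RULES)


print(is_flagged("This contains the Forbidden Phrase."))    # exact phrase: flagged
print(is_flagged("This contains the f0rbidden phrase."))    # one character swapped: missed
print(is_flagged("This contains the forbidden... phrase"))  # punctuation inserted: missed
```

A rule like this rarely fires on unrelated text (high precision), but anyone who knows or guesses the rule can rephrase around it (low recall).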

Summary of technical aspects

It's possible to detect known CSAM, but that's about all you can reliably detect. When scanning for unknown CSAM, even a low error rate of a few percent will lead to billions of messages being falsely reported EVERY DAY. Grooming detection is at best a prototype and relies on human moderation. Not only can detection be bypassed, it'll also be possible for criminals to deliberately cause false positives to disrupt investigations.

Working chatcontrol won't help

The Swiss police already receive various automated reports and state that in about 87% of cases, they are useless [C3] - what will the police do if this number increases to 99% or even higher due to false positives?

Also, again, scanning messages will not reveal anything if criminals encrypt their files, upload them to a hosting service and share links, as they are already doing [C1,4-6]. But even assuming the police had a magical technology that has a 100% accuracy for all reports, would they care about reducing distribution of CSAM?

A group of German journalists investigated and found that German police, after shutting down a CSAM sharing forum, didn't inform the abused filesharing services that they were hosting CSAM, so it remained online. After police had taken down one forum, the visitors of the forum simply switched to posting the old, still working download links & passwords on a different forum. Upon request by the journalists, the services were eager to remove the CSAM [C4].

In different cases around the world [C5, C6] (yes, multiple) police went even further: They found & arrested the server admin, then continued to run the servers, actively participating in the distribution of CSAM & hacking (ab)users in order to find them. In one case, Australian police ran a CSAM forum for 6 months (!) instead of shutting it down [C6].

Why am I telling you about these cases? They show:

- Once again, criminals rely on niche anonymous forums, not messengers. These cases wouldn't have been any different with the regulation in place. Forum providers won't care, and filesharing services won't be able to scan encrypted content

- Police do not work towards reducing the spread of CSAM, in some cases even increasing it

- The surveillance of messengers was/is not necessary for successful investigations

But why do the police not remove CSAM or even actively distribute it? A plausible explanation is that instead of searching for links and asking services to remove known CSAM, police prioritize trying to find & rescue victims by analyzing newly gathered CSAM. In 2020, Europol had a backlog of more than 40 million CSA images to analyze [T1d]. Implementing the chatcontrol regulation and surveilling messengers would thus simply expand the backlog and not help the victims of CSA!

![Slide from a europol presentation [T1d]. Text "50 million Unique images and videos of child sexual exploitation and abuse". Below the text, a progress bar stating "Only 20% examined"](/images/chatcontrol/victims.jpg)

But assuming we have criminals using messengers AND there's a magic technology AND police have the time and motivation to delete CSAM - great, we reduced the distribution of CSAM by a small fraction. Now what? Well, just because less CSAM is distributed online doesn't mean CSA stops offline - maybe it's reduced, but definitely not stopped. In 4/5 cases, children are abused by someone close to them [L2c]. To actually catch abusers, you need police, not algorithms!

But let's further assume just reducing the distribution of CSAM was our goal, which we now achieved. Hooray! Since we are assuming magic technology and competent police, there is only a seemingly minor downside: We established a precedent justifying mass surveillance. But hey, we achieved that goal and the technology will never be (ab)used for other purposes, right? Right?!? Well, to quote a proverb:

"The road to hell is paved with good intentions"

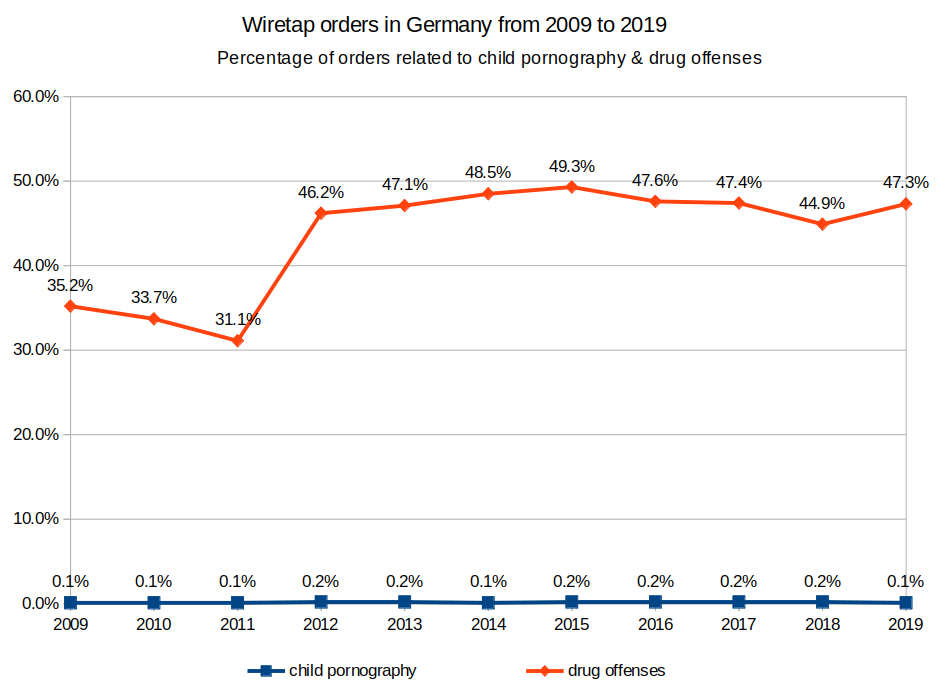

As an example, in Germany the ability for police to wiretap was introduced to investigate serious crimes such as production of child pornography. In reality, around 40% of wiretap orders are used for prosecution of drug-related crimes, while only about 0.1-0.2% are used for investigations related to child pornography [C7]. But that's Germany, it might be different for the regulation. Maybe I'm just falling for the slippery slope fallacy!

Turns out there's no slippery slope fallacy here: Even before the regulation is passed, plans have already been announced to detect terrorist content [C8], which would very likely be realized using the technologies specified by the chatcontrol regulation. Article 47 of the regulation allows the European Commission to change various technological aspects without approval of the parliament [L1a]! What will follow next, detection of copyright infringement?!?

An important thing to realize is that once the technology is in place & working, prohibiting any content becomes easy: To ban an image, add it to the list of hashes of known CSAM. To ban text, add another rule to match text which you don't want to be shared. This won't stop criminals or nerds (they can just use software disobeying the monitoring rules), but it WILL stop the flow of information on mainstream platforms. Look at China to see how this can be abused [C9].
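Here's a small sketch of why that's so easy. The scanning mechanism doesn't know or care WHAT it is looking for, so extending it is a one-line change. Everything here is made up for illustration: the class, its method names, and especially the use of SHA-256, which is just a stand-in for a real perceptual hash.

```python
import hashlib
import re
from typing import List, Pattern, Set


class Scanner:
    """Toy content scanner: a set of blocked image hashes plus a list of text rules."""

    def __init__(self) -> None:
        self.blocked_hashes: Set[str] = set()
        self.blocked_rules: List[Pattern[str]] = []

    @staticmethod
    def image_hash(image_bytes: bytes) -> str:
        # Stand-in only: a cryptographic hash, NOT a perceptual hash.
        return hashlib.sha256(image_bytes).hexdigest()

    def ban_image(self, image_bytes: bytes) -> None:
        self.blocked_hashes.add(self.image_hash(image_bytes))

    def ban_text(self, pattern: str) -> None:
        self.blocked_rules.append(re.compile(pattern, re.IGNORECASE))

    def is_blocked_image(self, image_bytes: bytes) -> bool:
        return self.image_hash(image_bytes) in self.blocked_hashes

    def is_blocked_text(self, text: str) -> bool:
        return any(rule.search(text) for rule in self.blocked_rules)


scanner = Scanner()
meme = b"placeholder bytes standing in for some unwanted image"

print(scanner.is_blocked_image(meme))                  # not blocked yet
scanner.ban_image(meme)                                # one line to ban an image...
scanner.ban_text(r"\bunwanted topic\b")                # ...and one line to ban a phrase
print(scanner.is_blocked_image(meme))                  # now blocked
print(scanner.is_blocked_text("About that Unwanted Topic"))  # now blocked
```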

Even if the regulation is never expanded, it remains a broad violation of privacy rights [L3-5]. Multiple experts (including an ex-judge from the European Court of Justice [L3]) have said that this regulation violates the European charter of human rights and will likely be overturned by the European Court of Justice. However, until this happens, the regulation will already have done a lot of damage.

To summarize: This regulation will not help stop the distribution of CSAM. At best it will lead to flooding of Europol and local police with reports of real or (more likely) false positive CSAM. It will establish a system of mass surveillance which impacts regular citizens, not the criminals it intends to stop. In the process it undermines end-to-end encryption. The established system is open to abuse and will be further expanded in the future.

In short, the chatcontrol regulation is disproportionate, ineffective, illegal. It is a regulation that absolutely MUST. NOT. PASS. So what can you do to help stop it?

What you can do

There are two things you can definitely do right now:

- Share this post and/or boost this thread on Mastodon to inform others

- Tell the European Commission you are not happy about this regulation here

Write right now! There are only about 200 comments (almost all negative) so far, your post could make a difference!

Other things you could do:

- are there demonstrations planned in your area? Participate or organize your own!

- participate in online discussions using the hashtag #chatcontrol (or #chatkontrolle for German)

- if there is an uncritical public mention (e.g. on radio) of the regulation, write to the people responsible - you might get to change their/present your view

- sign a petition:

If you know more, please let me know and I'll add them to the list.

In any case, what you want to do is build up public pressure on your representatives. Write to them, write to media. This worked well in the case of Germany: The minister of the interior Nancy Faeser was initially in favor of the regulation, but quickly backpedaled after massive criticism. Let's make sure the same also happens in other countries!

To stay informed, in the fediverse you can follow:

- @echo_pbreyer@chaos.social who posts updates about #chatcontrol from the parliament & has an informative webpage about it here (German version here)

- @chatgeheimnis@chaos.social for information about demonstrations in German-speaking countries

If you have a recommendation, please let me know and I'll add them to the list.

Sources

Technical Details/Stats

- T1: https://youtu.be/adY_uWfs90E

- T2: In 2020, the head of WhatsApp claimed 100bn messages are transferred per day (nitter alt)

- T3: Details about PhotoDNA & how it may be reversible:

- T4: Explainable AI is still an active field of research at DARPA

Case Study/Criminal Cases

- C1: https://saferinternet4kids.gr/wp-content/uploads/2021/02/1-s2.0-S0267364920300455-main.pdf

- C2: https://reason.com/2010/05/03/porn-star-saves-man-from-incom/ (paywall, archived without paywall here)

- C3: (in German, paywalled) https://www.tagesanzeiger.ch/bruessel-erlaubt-ueberwachung-privater-mails-391994232965, partial text here (nitter alt)

- C4: In English, in German

- C5: https://en.wikipedia.org/wiki/Playpen_(website)

- C6: https://www.vice.com/en/article/mg79nb/australian-authorities-hacked-computers-in-the-us / https://www.csoonline.com/article/3108412/aussie-cops-reportedly-hacked-us-tor-users-during-child-porn-probe.html

- C7: https://tutanota.com/blog/posts/eu-csam-scanning/ + not the same but related (german): https://netzpolitik.org/2018/bka-dokument-polizeibehoerden-wollen-staatstrojaner-vor-allem-gegen-drogen-einsetzen/

- C8: (german) https://netzpolitik.org/2022/vorratsdaten-und-entschluesselung-rat-fuer-justiz-und-inneres-will-mehr-ueberwachung/

- C9: https://citizenlab.ca/2018/08/cant-picture-this-an-analysis-of-image-filtering-on-wechat-moments/

Legal documents or arguments

- L1: The proposal for the regulation

- a: Search for "Article 47" or go to page 80 of the pdf version

- L2: Document impact report:

- L3: Legal opinion of former european court of justice judge

- L4: (German) article by German constitutional scholar about how the regulation is incompatible with EU law

- L5: Paper discussing harms